Long Short-Term Memory Networks (LSTMs)

LSTMs for Time-Series Forecasting

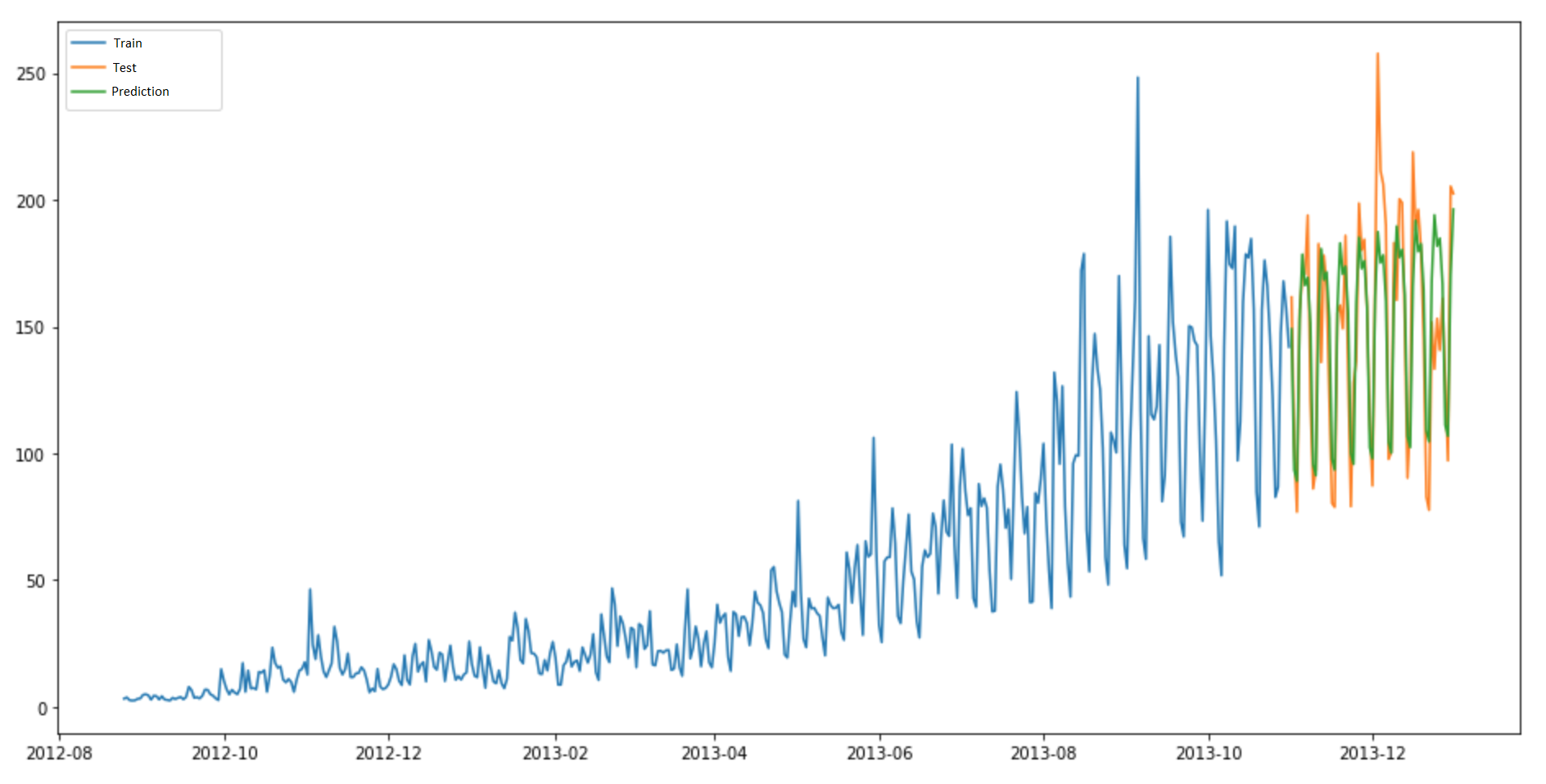

Neural networks are loosely modeled on how humans process information. Imagine reading a newspaper: you read an article that talks about a political organization and a person. Reading multiple lines of text, you gradually understand the context and can work out which person or organization line 200 of the article refers to. Recurrent neural networks are made to do just that: they "remember" sequences of information.

Recurrent Neural Networks

Recurrent Neural Networks (RNNs for short) are neural networks that have loops in them, which lets information persist from one step to the next.

Think of them as typical neural networks that pass information to multiple copies of themselves. Since they pass information sequentially from one copy to the next, they are ideal for learning sequences or learning order from data.
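The "copies of itself" idea can be sketched in a few lines of Python. This is a minimal illustration rather than a trained model: the scalar hidden state, the function names `rnn_step` and `run_rnn`, and the weight values are all assumptions picked for readability.

```python
import math

def rnn_step(x_t, h_prev, w_x, w_h, b):
    """One step of a toy scalar RNN: mix the current input with the previous state."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Apply the same cell (same weights) at every time step, passing the state along."""
    h = 0.0  # initial hidden state
    states = []
    for x_t in sequence:
        h = rnn_step(x_t, h, w_x, w_h, b)
        states.append(h)
    return states

# Same values, different order (weights are illustrative, not learned)
states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 1.0])
print(states_a)
print(states_b)
```

Both inputs contain the same values in a different order, yet the final states differ. That sensitivity to order is what makes RNNs a natural fit for sequences.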

RNNs have been effectively used for applications like:

RNN Applications

- Speech recognition

- Information extraction

- Sentiment analysis

and many more...

If you think about the above use-cases, all of them require learning some sort of sequence or understanding context to make future decisions.

You might be thinking, hey Dweep, isn't this article about LSTMs? Why not just use base RNNs for solving such problems? 🤔

Like any other flavor of neural networks, RNNs have their shortcomings too!

RNN Shortcomings

Let's go back to the example where you're reading an article from a newspaper (a physical newspaper at that, now how rare is that! 😉). Assume the following lines from the article:

"... as Martha proposed the bill in the house. She further in the statement proposed... "

The pronoun "She" can be inferred from the statement just before, which talks about Martha. RNNs work superbly in such scenarios, as the pieces of relevant information are close together.

In cases where a long-term context is needed, RNNs perform poorly. Consider the case below:

"The speaker hailed from a town called Santa Fe... New Mexico reportedly has ... "

For the above excerpt, suppose the gap between Santa Fe and New Mexico is huge. Even though Santa Fe is a town in New Mexico, a basic RNN would struggle to learn the relation and recognize the context. This is the problem of "long-term dependencies" (it means exactly what it says).

LSTMs come to the rescue! 🤩

The mighty LSTMs

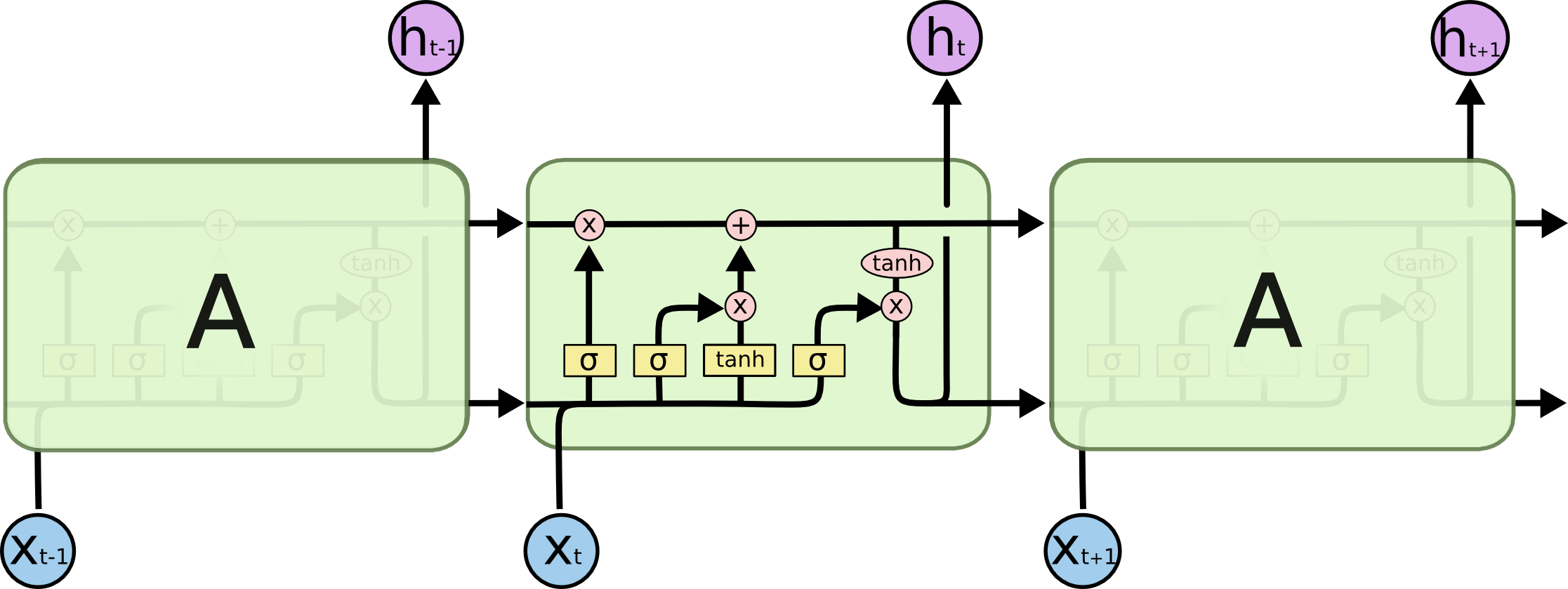

LSTM chain structure (Reference: Christopher Olah's amazing blog!)

LSTMs handle long-term dependencies well; it's essentially what they were designed for (introduced by Hochreiter & Schmidhuber (1997)).

Since LSTMs are a form of RNN, they have a chain-like structure, as in the image above.

The boxes and circles in the green cell above are how LSTMs learn to retain or forget information over varying lengths of time. A combination of the yellow boxes (activation functions in neural network terms) and the operators in the pink boxes forms so-called gates.

Thus, a single LSTM cell can be decomposed into 4 gates:

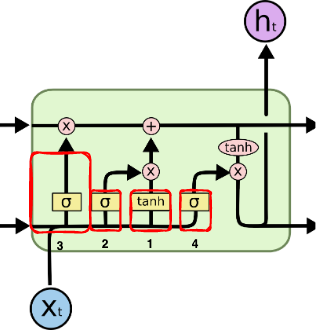

LSTM Cell Gates

LSTM Gates

- Forget Gate

  Block 3 from above is the forget gate. The sigmoid layer helps decide what, and importantly how much, to keep from the previous cell state and what to discard.

- Input Gate

  Block 2 from above is the input gate. Again, a sigmoid layer helps decide which values will be updated.

- Input Modulation Gate

  Block 1 from above is the input modulation gate. The tanh layer generates new candidate values, which are scaled by the input gate output and added to the previous cell state (after the forget gate has been applied) to form the current cell state.

- Output Gate

  Block 4 from above is the output gate. The sigmoid decides which parts of the cell state are passed on to the next cell as the cell's output.
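To see how the four gates fit together, here is a minimal sketch of one LSTM step using the standard update equations. It is a toy under stated assumptions: the state is a single scalar instead of a vector, and the names `lstm_step`, `sigmoid`, and the parameter dictionary `keep` (with its hand-picked values) are hypothetical, not taken from any library.

```python
import math

def sigmoid(z):
    """Squash z into (0, 1), so it can act as a 'how much to let through' dial."""
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x_t, h_prev, c_prev, p):
    """One step of a scalar LSTM cell; p maps each gate name to (w_x, w_h, bias)."""
    def pre(name):
        w_x, w_h, b = p[name]
        return w_x * x_t + w_h * h_prev + b

    f = sigmoid(pre("forget"))          # forget gate: how much old cell state to keep
    i = sigmoid(pre("input"))           # input gate: how much new information to admit
    g = math.tanh(pre("modulation"))    # input modulation gate: candidate values
    o = sigmoid(pre("output"))          # output gate: how much of the state to expose

    c_t = f * c_prev + i * g            # new cell state
    h_t = o * math.tanh(c_t)            # new output (hidden state)
    return h_t, c_t

# Hand-picked (not learned) parameters: forget gate almost fully open,
# input gate almost fully closed, so the cell should hold on to its state.
keep = {
    "forget": (0.0, 0.0, 10.0),
    "input": (0.0, 0.0, -10.0),
    "modulation": (1.0, 1.0, 0.0),
    "output": (0.0, 0.0, 10.0),
}

h, c = 0.0, 1.0  # start with something stored in the cell state
for x_t in [0.3, -0.7, 0.5]:
    h, c = lstm_step(x_t, h, c, keep)
print(h, c)
```

With these settings the cell state stays close to its starting value of 1.0 regardless of the inputs, which is the "retain" behavior in miniature. In a real LSTM the gate parameters are learned, so the network decides for itself when to keep, overwrite, or expose information.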

Check Christopher Olah's blog for more details.

That's how LSTMs retain long- and short-term information! ✨

Next, let's head over to a Jupyter notebook where we see an LSTM in action ->